Why 95% of AI Projects Fail — and How Causal AI + TLADS Can Change the Game (Part 1)

MIT recently issued a sobering report: 95% of Generative AI projects are failing to deliver material business or operational value. YIKES!! The findings spotlight four recurring traps:

Technology-first mindset: chasing algorithms instead of outcomes.

Correlation without causation: predicting patterns without knowing why.

No collaboration with subject matter experts: failing to harvest their expertise and tribal knowledge.

Lack of continuous learning: one-off pilots instead of adaptive systems.

The most alarming? The overreliance on correlation-driven predictions. When AI tells you what is likely to happen but not why, leaders are left guessing at interventions. Without understanding cause-and-effect, organizations can’t take reliable preventative or corrective action.
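To see how correlation alone can mislead, here is a small Python simulation. The numbers are invented for illustration. Frail patients are more likely to be assigned a sitter and also more likely to fall, while the sitter itself halves fall risk. A correlation-only view compares fall rates between patients with and without sitters. A causal view compares like with like by adjusting for frailty (the confounder) before averaging. The function names and probabilities are hypothetical, and the sketch assumes frailty is the only confounder.

```python
import random

def simulate_patients(n=100_000, seed=7):
    """Hypothetical data: frailty drives both sitter assignment and falls."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        frail = rng.random() < 0.30
        sitter = rng.random() < (0.70 if frail else 0.10)  # sitters go to frail patients
        base_risk = 0.20 if frail else 0.04
        p_fall = base_risk * (0.5 if sitter else 1.0)        # a sitter halves fall risk
        fell = rng.random() < p_fall
        rows.append((frail, sitter, fell))
    return rows

def fall_rate(rows):
    return sum(fell for _, _, fell in rows) / len(rows)

def naive_difference(rows):
    """Correlation view: fall rate with a sitter minus fall rate without one."""
    with_sitter = [r for r in rows if r[1]]
    without_sitter = [r for r in rows if not r[1]]
    return fall_rate(with_sitter) - fall_rate(without_sitter)

def adjusted_difference(rows):
    """Causal view (backdoor adjustment): compare within each frailty stratum,
    then weight each stratum by its share of all patients."""
    total = 0.0
    for frail in (True, False):
        stratum = [r for r in rows if r[0] == frail]
        treated = [r for r in stratum if r[1]]
        control = [r for r in stratum if not r[1]]
        weight = len(stratum) / len(rows)
        total += weight * (fall_rate(treated) - fall_rate(control))
    return total

rows = simulate_patients()
naive = naive_difference(rows)
adjusted = adjusted_difference(rows)
print(f"naive: {naive:+.3f}  adjusted: {adjusted:+.3f}")
```

With these assumed probabilities, the naive comparison comes out around +0.02 (sitters look harmful), while the frailty-adjusted estimate is roughly -0.04 (sitters help). A leader acting on the correlation alone would pull sitters from exactly the patients who need them.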

To salvage these massive AI investments, leaders must broaden their perspective. Causal AI is the missing piece—a way to uncover the drivers behind outcomes and identify which interventions actually change results.

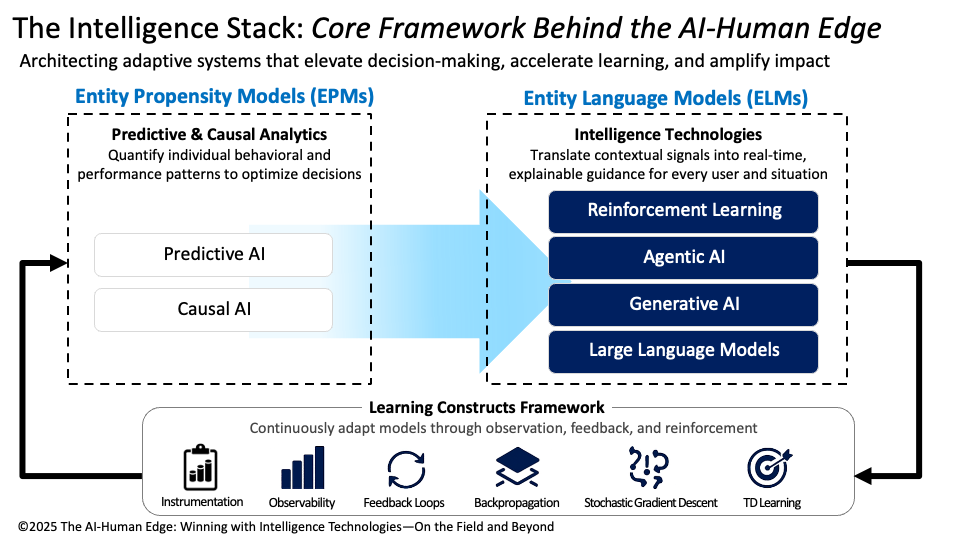

In my upcoming book, The AI-Human Edge, I describe an intelligence stack built from three components (Figure 1). Entity Propensity Models (EPMs) combine predictive and causal analytics and serve as the “brains” of the intelligence system. Entity Language Models (ELMs) act as the “voice and body,” delivering contextual, adaptive guidance through Generative AI, Agentic AI, Reinforcement Learning, and LLMs/RAG. A Learning Constructs Framework (instrumentation, observability, and feedback loops) connects it all and serves as the “nervous system” for continuous improvement.

Figure 1: The Intelligence Stack that Enables the AI-Human Edge

But algorithms alone aren’t enough. To build trustworthy and actionable causal systems, we need a structured way to harvest SME knowledge, prioritize use cases, and tie analytic insights back to real-world decisions. That’s where the Thinking Like a Data Scientist (TLADS) methodology comes in.

To make this practical, we’ll walk through a real-world scenario: reducing patient falls in hospitals. Using TLADS, we’ll design a Fall Risk Score that is not only more accurate, but also more explainable and actionable for frontline caregivers.

Let’s jump into the exercise.

TLADS Steps 1 & 2: Frame the Initiative and Harvest Stakeholder Expertise

Falls in hospitals represent both a clinical challenge and a financial burden. They can extend hospital stays, trigger legal exposure, invite penalties from insurers, and erode patient trust. From a healthcare leadership perspective, reducing falls supports the “Triple Aim” of healthcare: improving outcomes, enhancing the care experience, and lowering costs.

But preventing falls is an ecosystem challenge. Different stakeholders bring different priorities. For nurses, the need is clear signals they can trust during busy shifts. Administrators focus on liability reduction and safety ratings. Families want reassurance that their loved ones are safe. And patients value autonomy and dignity—they don’t want “fall prevention” to mean restrictive care.

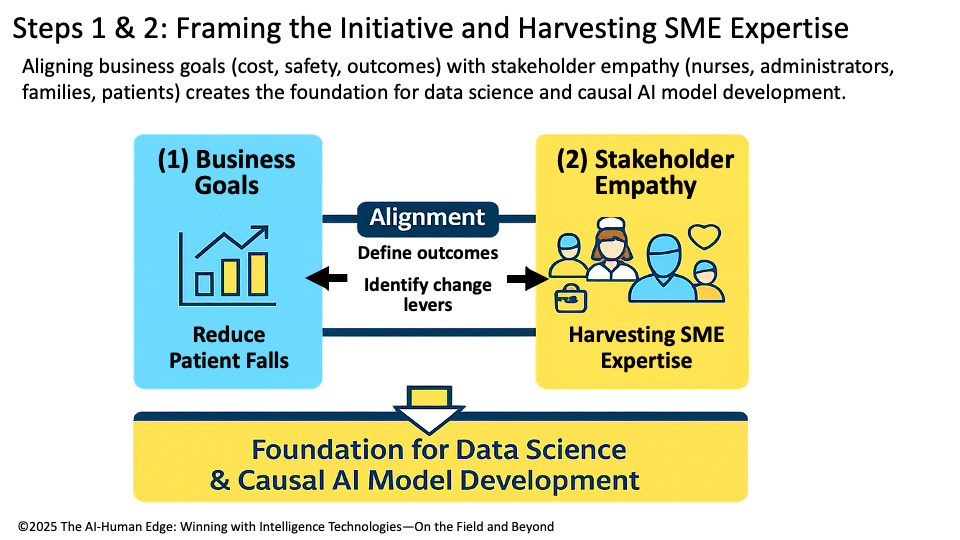

This is where the TLADS methodology provides critical structure. In Step 1 (Assess Business Initiative) and Step 2 (Empathize with Stakeholders), we define what operational success looks like (reduced falls, improved safety ratings, lower liability) and identify the metrics that will measure whether the desired outcomes are achieved (Figure 2).

Figure 2: Steps 1 & 2: Framing the Initiative

💡 Causal AI Lens – TLADS Steps 1 and 2

TLADS Steps 1 and 2 prepare us to deliver relevant Causal AI outcomes by aligning the “why” (business and operational goals) with the “how” (stakeholder insights and tribal knowledge). Causal AI thrives when it has clear desired outcome metrics and rich, context-specific expertise to test. With this foundation, we move from simply predicting risk to identifying the levers of change that stakeholders can act upon with confidence.
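One widely used way to express the fall outcome metric is falls per 1,000 patient days (with injurious falls tracked the same way). Normalizing by census lets units of different sizes be compared. Below is a minimal sketch, assuming monthly unit-level counts are available. The unit name, field names, and numbers are made up for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class UnitMonth:
    """Hypothetical monthly summary for one nursing unit."""
    unit: str
    falls: int
    injury_falls: int
    patient_days: int

def per_1000_patient_days(events: int, patient_days: int) -> float:
    """Normalize an event count by census: events / patient days x 1,000."""
    if patient_days <= 0:
        raise ValueError("patient_days must be positive")
    return 1000 * events / patient_days

def fall_metrics(month: UnitMonth) -> dict:
    return {
        "falls_per_1000": per_1000_patient_days(month.falls, month.patient_days),
        "injury_falls_per_1000": per_1000_patient_days(month.injury_falls, month.patient_days),
    }

def relative_reduction(baseline: float, current: float) -> float:
    """Fractional improvement against the baseline rate (0.25 means 25% fewer)."""
    return (baseline - current) / baseline

baseline = fall_metrics(UnitMonth("Unit A", falls=12, injury_falls=3, patient_days=3000))
current = fall_metrics(UnitMonth("Unit A", falls=9, injury_falls=2, patient_days=3100))
change = relative_reduction(baseline["falls_per_1000"], current["falls_per_1000"])
print(f"{baseline['falls_per_1000']:.2f} -> {current['falls_per_1000']:.2f} ({change:.0%} reduction)")
```

For example, 12 falls over 3,000 patient days is 4.0 falls per 1,000 patient days. Agreeing on a normalized metric like this up front gives the causal analysis a clear outcome to explain.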

TLADS Step 3: Model Business Entities

At the heart of any causal model is the entity we’re trying to understand and support. In this case, the entity is the patient. To model fall risk accurately, we need to capture not just who the patient is, but the rich set of attributes and contextual factors that shape their hospital experience.

Some of these attributes are straightforward: age, medication regimen, mobility level, and cognitive status. Others are situational: the nurse-to-patient ratio at a given time, the time of day when most falls occur, or a history of prior falls. And then there are contextual factors that live outside the patient but still shape their risk—environmental hazards like slippery floors, whether staffing levels dip during a shift change, or the presence of family members who can assist.

This is where Entity Propensity Models (EPMs) shine. By organizing these attributes and contexts into a living model of the patient, we’re able to move beyond generic fall prevention protocols toward highly individualized risk scores. This step is also where the AI-Human Edge journey begins—placing the patient at the center of a causal, adaptive system that learns and improves over time.
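As a rough illustration, the patient entity can be represented as a record that groups the three kinds of attributes described above: patient-level attributes, situational factors, and external context. This is a hypothetical Python sketch, not the EPM design from the book. The field names are assumptions. `with_situation()` refreshes the situational snapshot (for example, at each shift), and `features()` flattens the entity into one row that a predictive or causal model could consume.

```python
from dataclasses import asdict, dataclass, replace

@dataclass(frozen=True)
class PatientAttributes:
    age: int
    sedating_medications: int   # count of active sedating/psychoactive meds
    mobility_level: str         # e.g. "independent", "assisted", "bedbound"
    cognitively_impaired: bool
    prior_falls: int

@dataclass(frozen=True)
class Situation:
    patients_per_nurse: float   # staffing on the current shift
    hour_of_day: int            # 0-23
    shift_change: bool

@dataclass(frozen=True)
class Context:
    floor_hazard_reported: bool
    family_present: bool

@dataclass(frozen=True)
class PatientEntity:
    patient_id: str
    attributes: PatientAttributes
    situation: Situation
    context: Context

    def with_situation(self, situation: Situation) -> "PatientEntity":
        """Return an updated snapshot, e.g. at the start of each shift."""
        return replace(self, situation=situation)

    def features(self) -> dict:
        """Flatten the nested entity into a single feature row."""
        row = {"patient_id": self.patient_id}
        for part in (self.attributes, self.situation, self.context):
            row.update(asdict(part))
        return row

patient = PatientEntity(
    patient_id="bed-12",
    attributes=PatientAttributes(82, 2, "assisted", True, 1),
    situation=Situation(patients_per_nurse=5.0, hour_of_day=14, shift_change=False),
    context=Context(floor_hazard_reported=False, family_present=True),
)
night = patient.with_situation(Situation(patients_per_nurse=7.0, hour_of_day=23, shift_change=True))
print(night.features())
```

Keeping the situational block separate makes it easy to rescore the same patient as staffing and time of day change, without touching the slower-moving clinical attributes.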

💡 Causal AI Lens – TLADS Step 3

Causal AI turns the entity model into a living map of cause-and-effect, showing how patient attributes, environment, and interventions combine to influence outcomes. We see how sedation, staffing, and mobility interact to raise or lower risk. This causal map makes the model both explainable and actionable, highlighting the levers of change that matter most for each patient.
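A causal map like this is typically written down as a directed acyclic graph (DAG), with arrows running from cause to effect. The sketch below encodes one hypothetical fall-risk graph in plain Python. The edges are illustrative assumptions that SMEs would need to confirm, not clinical findings. The code checks that the graph has no cycles and lists every node with a directed path to a fall (the candidate levers). It also uses the parents of an intervention as an adjustment set, which blocks every backdoor path provided all those parents are measured.

```python
from collections import deque

# Hypothetical causal graph: each key points to the nodes it directly affects.
CAUSAL_EDGES = {
    "age": ["mobility", "cognitive_impairment"],
    "cognitive_impairment": ["sedation", "fall"],
    "sedation": ["mobility", "fall"],
    "mobility": ["fall"],
    "shift_change": ["staffing"],
    "staffing": ["rounding_frequency"],
    "rounding_frequency": ["fall"],
    "fall": [],
}

def parents(graph, node):
    return sorted(p for p, children in graph.items() if node in children)

def topological_order(graph):
    """Kahn's algorithm; raises if the causal map contains a cycle."""
    indegree = {n: 0 for n in graph}
    for children in graph.values():
        for c in children:
            indegree[c] += 1
    queue = deque(sorted(n for n, d in indegree.items() if d == 0))
    order = []
    while queue:
        n = queue.popleft()
        order.append(n)
        for c in graph[n]:
            indegree[c] -= 1
            if indegree[c] == 0:
                queue.append(c)
    if len(order) != len(graph):
        raise ValueError("graph has a cycle; not a valid causal DAG")
    return order

def causes_of(graph, outcome):
    """All nodes with a directed path into the outcome (candidate levers)."""
    found, stack = set(), [outcome]
    while stack:
        for p in parents(graph, stack.pop()):
            if p not in found:
                found.add(p)
                stack.append(p)
    return found

def adjustment_set(graph, treatment):
    """Parents of the treatment satisfy the backdoor criterion if all are observed."""
    return parents(graph, treatment)

print(topological_order(CAUSAL_EDGES))
print(sorted(causes_of(CAUSAL_EDGES, "fall")))
print(adjustment_set(CAUSAL_EDGES, "sedation"))
```

In this toy graph, estimating what changing sedation does to falls means comparing patients with similar cognitive status. Mobility should not be adjusted for, because it sits on the causal path from sedation to falls.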

TLADS Step 4: Identify & Prioritize Use Cases

Once we’ve modeled the patient, the next question becomes: Where will this model actually make a difference? Hospitals are full of potential analytics projects, but not every idea justifies the time, investment, or cultural energy it takes to make it work. The organizational discipline here is to identify and prioritize the use cases where analytics can drive measurable impact.

For fall prevention, one of the highest-value use cases might be “Improve Fall Risk Identification and Intervention Effectiveness.” This use case requires a Daily Fall Risk propensity score that feeds directly into care team huddles or electronic dashboards, highlighting which patients need closer monitoring, medication adjustments, or a sitter assignment. By focusing on this specific, actionable use case, the hospital ensures that analytics become a trusted part of frontline decision-making rather than yet another unused report sitting on a shelf.
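In its simplest form, the daily score could be a logistic model recomputed from each morning's snapshot, with the results ranked for the huddle. The sketch below is minimal. The coefficients, the 0.10 threshold, and the field names are invented for illustration and are not clinically validated. A real score would be fit and calibrated on the hospital's own data.

```python
import math
from typing import NamedTuple

class DailySnapshot(NamedTuple):
    patient_id: str
    age: int
    sedating_meds: int
    prior_falls: int
    cognitively_impaired: bool
    needs_assistance_to_walk: bool

# Illustrative weights only; not fitted to any real data.
INTERCEPT = -5.0
WEIGHTS = {
    "age_over_65": 0.8,
    "sedating_meds": 0.5,
    "prior_falls": 0.9,
    "cognitively_impaired": 1.0,
    "needs_assistance_to_walk": 0.7,
}

def fall_risk_score(p: DailySnapshot) -> float:
    """Logistic propensity: probability-like score between 0 and 1."""
    z = (INTERCEPT
         + WEIGHTS["age_over_65"] * (p.age > 65)
         + WEIGHTS["sedating_meds"] * p.sedating_meds
         + WEIGHTS["prior_falls"] * min(p.prior_falls, 3)  # cap extreme histories
         + WEIGHTS["cognitively_impaired"] * p.cognitively_impaired
         + WEIGHTS["needs_assistance_to_walk"] * p.needs_assistance_to_walk)
    return 1.0 / (1.0 + math.exp(-z))

def huddle_list(patients, threshold=0.10):
    """Patients at or above the threshold, highest risk first."""
    scored = [(p.patient_id, fall_risk_score(p)) for p in patients]
    flagged = [s for s in scored if s[1] >= threshold]
    return sorted(flagged, key=lambda s: s[1], reverse=True)

census = [
    DailySnapshot("bed-12", 82, 2, 1, True, True),
    DailySnapshot("bed-07", 45, 0, 0, False, False),
    DailySnapshot("bed-19", 70, 1, 0, False, True),
    DailySnapshot("bed-31", 77, 1, 2, False, True),
]
for patient_id, score in huddle_list(census):
    print(f"{patient_id}: {score:.2f}")
```

With these made-up weights, bed-12 scores about 0.35 and bed-31 about 0.23, so both appear on the huddle list, while the other two patients fall below the threshold.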

💡 Causal AI Lens – TLADS Step 4

Causal AI sharpens prioritization by revealing which interventions actually reduce falls. Instead of spreading scarce resources across every possible risk factor, it identifies the high-impact levers, such as whether reducing nighttime sedative use or increasing rounding frequency during shift changes has the larger effect on falls. By focusing on the interventions with the greatest causal impact, the hospital aligns analytic resources with the actions that matter most, so the fall risk score drives not only clinical improvements but also operational value and a better patient experience.
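One simple way to rank interventions by causal impact rather than by correlation is standardization. Treated and untreated patients are compared within strata of baseline risk, and the within-stratum risk differences are then averaged using each stratum's share of the population. This sketch rests on strong assumptions. The counts are hypothetical, baseline risk is assumed to be the only confounder, and a real analysis would also report uncertainty around each estimate.

```python
from typing import Dict, List, Tuple

# Per stratum: (population share, falls_treated, n_treated, falls_control, n_control)
Stratum = Tuple[float, int, int, int, int]

# Hypothetical observational counts, stratified by baseline fall risk.
EVIDENCE: Dict[str, Dict[str, Stratum]] = {
    "reduce_nighttime_sedatives": {
        "high_baseline_risk": (0.3, 30, 600, 90, 900),
        "low_baseline_risk": (0.7, 10, 1000, 30, 1500),
    },
    "extra_rounding_at_shift_change": {
        "high_baseline_risk": (0.3, 56, 800, 72, 900),
        "low_baseline_risk": (0.7, 15, 1000, 36, 2000),
    },
}

def standardized_risk_difference(strata: Dict[str, Stratum]) -> float:
    """Treated-minus-control fall risk within each stratum, averaged by
    each stratum's share of the patient population."""
    total_share = sum(s[0] for s in strata.values())
    rd = 0.0
    for share, f_t, n_t, f_c, n_c in strata.values():
        rd += share * (f_t / n_t - f_c / n_c)
    return rd / total_share

def rank_interventions(evidence) -> List[Tuple[str, float]]:
    """(intervention, estimated falls prevented per 1,000 patients), best first."""
    impact = [(name, -1000 * standardized_risk_difference(strata))
              for name, strata in evidence.items()]
    return sorted(impact, key=lambda x: x[1], reverse=True)

for name, prevented in rank_interventions(EVIDENCE):
    print(f"{name}: {prevented:.1f} falls prevented per 1,000 patients")
```

With these invented counts, reducing nighttime sedatives prevents about 22 falls per 1,000 patients versus about 5 for extra rounding at shift change, so it would move to the top of the list.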

Part 1 Summary: Framing and Assessing the Initiative

By walking through TLADS Steps 1–4, we’ve seen how to frame the initiative around business and clinical outcomes, empathize with stakeholders to surface tacit expertise, model the patient as the central entity, and prioritize the use cases where analytics can deliver the most impact. These early steps are critical because they shift the work away from “technology-first” thinking toward outcome-first design, grounded in cause-and-effect reasoning.

This foundation directly addresses the failure traps MIT highlighted:

Technology-first mindset → replaced with outcome-driven framing.

Correlation without causation → replaced with causal reasoning to uncover drivers.

No collaboration with subject matter experts → replaced with TLADS workshops that harvest tribal knowledge.

Lack of continuous learning → replaced with an architecture designed for feedback and adaptation.

By reframing how we start, TLADS and Causal AI ensure the Fall Risk Score won’t just be technically sound — it will be trusted, explainable, and actionable in daily hospital operations.

In Part 2, we’ll move deeper into the mechanics. We’ll show how Step 5 (brainstorming features and applying causal modeling techniques) and Step 6 (exploring causal algorithms) bring computational power to the framework. We’ll then tie it all together with Steps 7 and 8, demonstrating how causal scores translate into real-world decisions and continuously improve through learning constructs. Finally, we’ll return to the MIT study and show how TLADS + Causal AI directly solves the four recurring traps that sink 95% of AI projects.